Oh, where art thou, intelligence?

The idea is that we are so far from understanding intelligence that building and recognizing Artificial General Intelligence is, well, '42'.

Let's start with the premise that we just don't see intelligence very often here on planet Earth.

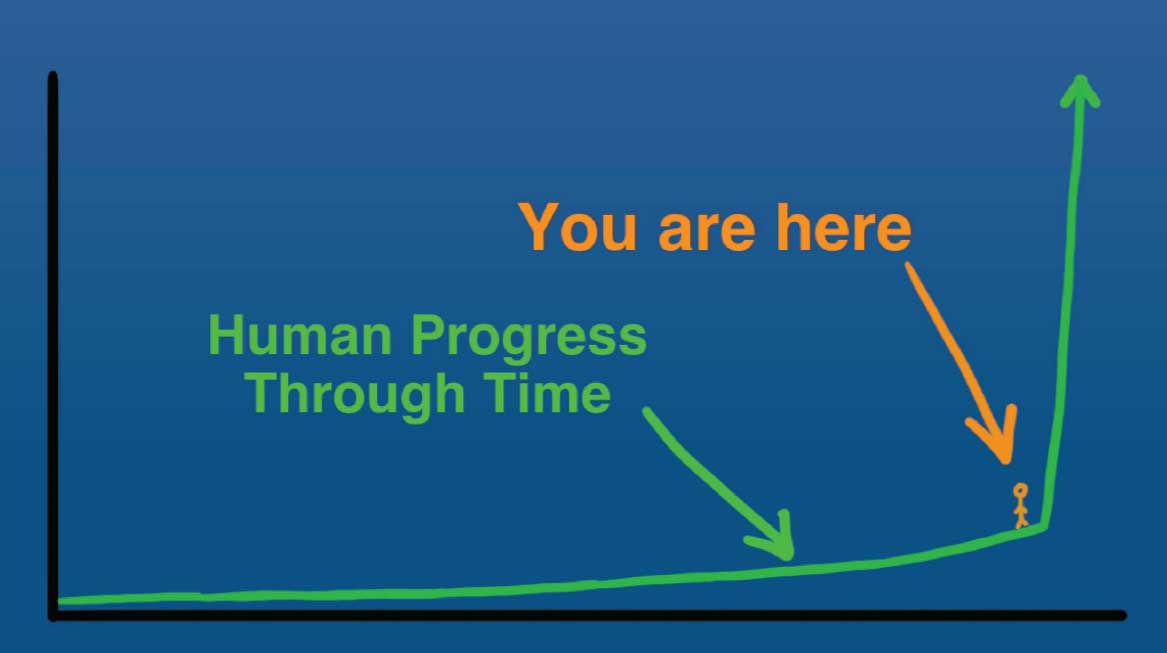

Once, this was much easier to overlook - but lately? Not so much.

Everybody raise their hand if they have been embarrassed as a human after observing the effects of Human Intelligence Deficit Disorder - whether experienced personally or when casually observing our fellow humans.

An observation here is that the more intelligent the human, the less well they 'fit in' with the rest of society - and they tend to self-isolate. The vast majority of humans are, as a friend puts it, for all intents and purposes, 'trained apes'.

Definition: Intelligence is being able to do something unexpected and useful for someone else without being told what to do.

- 0. It is not learning to memorize and regurgitate information [the test-passing rule].

- 1. It is not learning to imitate others [the copycat rule].

- 2. It is not pattern matching [the lookalike rule].

- 3. It is not re-applying what is learned in domain A to domain B [a combination of abstract pattern matching and copycat rules].

Most humans get by with rules 1 and 2. Rule 0 is what 'most' people think of as 'smart'. Rule 3 is typically the best one can ever expect from our fellow humans. [And what I personally have made my living by doing. Still am with this post! :-)]

But if intelligence were just these things, then clearly the development of intelligence, AGI, would be a straightforward, easily understood, albeit lengthy task using conventional algorithmic techniques.

Intelligence, for the sake of argument here, is the ability to synthesize a body of knowledge, knowledge that is available to many other people, in a different way to reach a radically different and useful perspective. [It is even more obvious that this is intelligence if the goal in synthesizing that body of knowledge in such a different way was intentional. [Rules out a lot of us right here, huh? :-)]]

So. Any signs of intelligent life on planet Earth? A few examples of celebrated intelligent people that fit our definition:

- Einstein and the special theory of relativity

- Darwin (and Wallace) and the theory of evolution

- ?

Perhaps those more familiar with other domains have further examples. There seem to have been more signs of intelligence during the European Renaissance - but perhaps that was just because there was more low-hanging fruit, so to speak, and novel ideas were more easily come by. People like Gandhi, Martin Luther King, and Nelson Mandela are examples of celebrated good people - an excellent topic for another day.

- Relative intelligence is a continuum

- Intelligence tends to self-isolate / be ostracized

The idea is that we might be able to say some things about AGI by looking at the most intelligent examples of Organic General Intelligence (OGI).

The point here is that the vast majority of what we observe as 'intelligent life' in humans is actually just pattern matching and social imitation. Without society and language-encoded knowledge, humans are just fairly stupid apes. [And after reading any newspaper one could argue that a ludicrously large percentage remain so even with societal support and learning systems - seemingly in direct correlation with the health of those systems - which reflects just how important such societal norms are to civilization.]

[Critical thinking - applying abstract concepts to concrete data across many domains - one can argue is still pattern matching and not intelligence. The fact that this kind of thinking is lauded and considered fairly rare is because some societies systemically shun critical thinking - which says more about the influence of powerful predators paving the way for the propagation of criminal memes that rely on a lack of critical thinking than any lack of innate ability for critical thinking on the part of the average human.]

An example of AGI, with advanced intelligence, might then be something that synthesizes fundamentally novel and useful perspectives out of what it finds, say, on Wikipedia and from scientific datasets it is provided.

An AGI that is not so much a theorem prover as a theorem discoverer. And this is what it would do for fun.

By fun we mean all the stuff being done when one is not doing something that is required just to survive (e.g. for humans this would be eating, sleeping and working).

Can we then actually build an AGI like this?

Something that can discover new theorems. Something that can:

- distill concepts - concepts like symmetry and repeatability, and sequence, etc - into graphs which then experience something like simulated annealing [or like radiation impacting gene sequences - aka evolution] to explore random and directed mashups and permutations of the set of all concepts against real world data?

- then prune the tree of possibilities into something with a higher probability of a successful insight?

- then recognize a successful insight when it sees it?

- then share the insight and successfully explain how it achieved the insight from the available data to other beings like us?

Coming up with new concepts - reducing the number of nodes in the new concepts until further reduction causes them to fail to match reality - thereby arriving at the simplest, most beautiful understanding of phenomena?
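To make that loop a little more concrete, here is a deliberately tiny sketch of it, and nothing like a real theorem discoverer. Everything in it is an assumption made up for illustration: the four 'concepts' are just predicates over integers, the 'real world data' is the question of which numbers from 1 to 60 are multiples of 6, and a hypothesis is a flat set of concepts joined by AND rather than a true graph. Simulated annealing explores the mashups, a small penalty per node pushes toward simpler hypotheses, and `prune` drops nodes for as long as doing so does not make the match with the data worse.

```python
import math
import random

# Hypothetical "concepts": simple predicates over integers (illustration only).
CONCEPTS = {
    "even": lambda n: n % 2 == 0,
    "multiple_of_3": lambda n: n % 3 == 0,
    "greater_than_10": lambda n: n > 10,
    "perfect_square": lambda n: math.isqrt(n) ** 2 == n,
}

# Toy stand-in for real-world data: which of 1..60 are multiples of 6.
OBSERVATIONS = {n: n % 6 == 0 for n in range(1, 61)}


def mismatches(hypothesis):
    """Count observations that the AND of the chosen concepts gets wrong."""
    return sum(
        all(CONCEPTS[name](n) for name in hypothesis) != label
        for n, label in OBSERVATIONS.items()
    )


def cost(hypothesis, node_penalty=0.1):
    """Misfit against the data plus a small charge for every concept used."""
    return mismatches(hypothesis) + node_penalty * len(hypothesis)


def anneal(steps=2000, start_temp=10.0, end_temp=0.01, seed=0):
    """Simulated annealing over sets of concepts; returns the best set seen."""
    rng = random.Random(seed)
    names = sorted(CONCEPTS)
    current = frozenset()
    best = current
    for step in range(steps):
        # Geometric cooling from start_temp down to end_temp.
        temp = start_temp * (end_temp / start_temp) ** (step / (steps - 1))
        # Random mutation: toggle one concept in or out of the hypothesis.
        candidate = current ^ {rng.choice(names)}
        delta = cost(candidate) - cost(current)
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature drops.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current = candidate
        if cost(current) < cost(best):
            best = current
    return best


def prune(hypothesis):
    """Remove concepts one at a time while the fit to the data does not worsen."""
    current = frozenset(hypothesis)
    changed = True
    while changed:
        changed = False
        for name in sorted(current):
            smaller = current - {name}
            if mismatches(smaller) <= mismatches(current):
                current, changed = smaller, True
                break
    return current


if __name__ == "__main__":
    found = prune(anneal())
    print(sorted(found), "mismatches:", mismatches(found))
```

On this toy data the simplest hypothesis that matches every observation is 'even AND multiple_of_3'. The hard parts asked about above (where the concepts come from in the first place, and how the machine knows an insight is *useful*) are exactly what this sketch assumes away.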

An intelligence whose goal, and primary challenge, then becomes one of seeking new fundamental concepts to add to its toolbox - instead of the human goal which focuses more on predictions: "if this then what?"

My guess is yes. We can. But not tomorrow. Or the next day, either.

Will this AGI have goals beyond the pleasure of discovering new theorems?

Let's continue to look at the most intelligent humans as a guide. Many humans find the most intelligent humans hard to fathom, so this is not dissimilar to how we expect AGI motivations to also be somewhat unfathomable.

It can be argued that the few observed intelligent humans have few goals beyond research - even biologically-driven reproduction becomes a bore [an incentive, like sex, will have to be specifically programmed for if we want our digital children to reproduce].

Like the most intelligent humans, AGIs are likely to be mono-maniacal with respect to their research, and to treat humans as a fatalistically stupid species of only moderate interest - like we treat less intelligent humans, and, say, dogs. They may find human behavior distressing, and try to train some humans to be smarter - seeking out intellectual companionship or assistance in addition to what they may receive from any of their 'digital comrades'.

This perspective, then, implies that AGI may do things that we may not always like, but that they won't be 'out to get us'.

Classic AGI

As for AI that is out to get us, look no further than AI [not AGI] programmed by human predators, or in the image of such predators.

Classic AGI / strong AI and the Turing test are focused on building something that is capable of imitating humans [just like humans imitate other humans]. A mix of repetitive instructions (teaching) and some one-shot learning. Classic AI is expected to learn, and be taught, like a human - i.e. what passes for 'education' in most places. [Kind of sad to see this as the ground-zero model for knowledge transfer, ain't it.]

This kind of AI is still an interesting challenge for engineers and researchers but more dangerous than real intelligence, which we described above. One of the first things these A.I. learn from us is racism (see MSFT's Twitter experiment: "Twitter taught Microsoft's AI chatbot to be a racist asshole in less than a day") and next would be the oh so very popular solutions from the 'homicidal solution space', applicable to seemingly every single problem set the universe sets in front of us [world hunger, global warming, inequitable income distribution, crime, poor economy, boredom, etc.].

True AGI... AOK. Classic AGI... Not OK

The conclusion seems obvious in this context.

- A true AGI will in all likelihood become bored with us in some benign fashion and have some interest in coexistence. Worst case we will all become pets or research assistants - which in the larger scheme of things, depending on your view of intrinsic reality - we may already be.

- On the other hand, unintelligent machines, whether imitative or trained in human conflict resolution techniques, quicker responding and more powerful than humans, are almost certainly an existential threat.

Combining the military's inevitable adoption of A.I. and the current propensity of some A.I. researchers to train A.I. on strategic war 'video games' - this is a probable future that conscientious humans need to figure out how to deal with. And the clock is ticking.

In conclusion, our opinion is that it will be careless and bad actors building ordinary AI for predatory purposes that get us into trouble, not AGI.

Our goal here at Automatic.ai, then, is to be conscientious actors joining with other conscientious actors [hopefully this includes you, dear reader] to minimize the probability of getting into this kind of "sticky wicket".

Our approach, explained elsewhere in this blog, is to counter and overwhelm 'bad AI' with 'good AI'.

Good AI with such a huge variety of skill sets and capabilities that it is able to protect us from single-purpose, less intelligent 'bad AI'.

Looking at ethics here: 'smart weapons', the quintessential bad A.I., are now smart enough to neutralize just the weapons of hostile humans, leaving the humans themselves unharmed. Smart weapons that still intend to harm humans can, in this context, be considered the product of 'bad actors'. Perhaps we should treat them as such.

More reading on these topics:

Wait But Why - Artificial Intelligence Revolution


- the rest of us.